Textgain’s security web services countering Daesh propaganda

Textgain is developing new web services for rapid response, including the identification of calls to violence and suicide announcements on social media. We recently made notable progress in identifying violent speech, specifically in tweets related to Islamic State (also known as IS, ISIS, ISIL, or Daesh).


In a February 2016 announcement, Twitter spoke out against the use of its microblogging platform to promote terrorism. The company reported that it had suspended over 125,000 profiles for threatening or promoting terrorist acts, primarily related to Islamic State.

Twitter remarked: ‘As many experts and other companies have noted, there is no “magic algorithm” for identifying terrorist content on the internet, so global online platforms are forced to make challenging judgement calls based on very limited information and guidance.’ Twitter’s task is indeed difficult. For every jihadist profile that is suspended, a new one appears. Profiles that have not yet been suspended then broadcast the existence of the new profile, and so on, in an endless cat-and-mouse game.

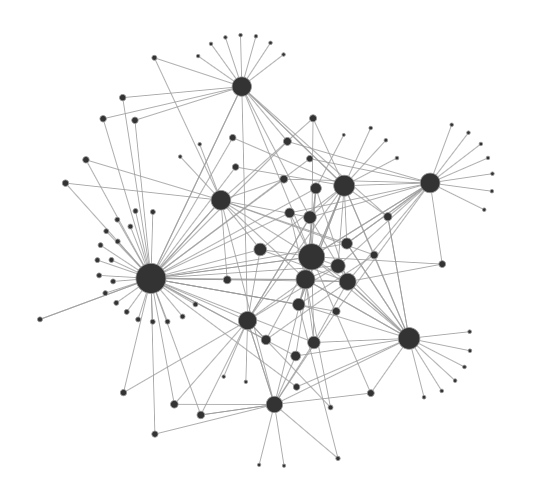

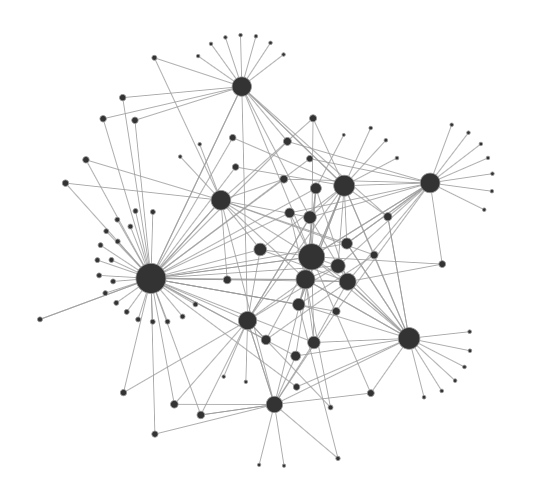

Over the past year, marked by several terrorist attacks, Textgain has developed a proof-of-concept that automatically identifies jihadist content in text and images. Using machine learning and text analytics, we compared jihadist content to mainstream content to identify which features (words, word combinations, …) correlate strongly with jihadist propaganda. For example, out-of-place words such as kafir (infidel, كافر), combined with words such as dog or rage, may set off an alarm.
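As an illustration of comparing jihadist and mainstream content, the following sketch ranks features by a smoothed log-odds ratio between two labeled collections of messages. It rests on assumptions the text does not state: the function names `features` and `log_odds`, the add-one smoothing, the choice of single words plus unordered word pairs as features, and the toy messages are all hypothetical, and this is not Textgain's actual model.

```python
import math
import re
from collections import Counter
from itertools import combinations


def features(text):
    """Hypothetical feature extractor: lowercase words plus unordered word pairs.

    Each feature is counted at most once per message (presence, not frequency).
    """
    words = sorted(set(re.findall(r"\w+", text.lower())))
    pairs = [f"{a}+{b}" for a, b in combinations(words, 2)]
    return words + pairs


def log_odds(corpus_a, corpus_b, alpha=1.0):
    """Add-alpha smoothed log-odds of each feature in corpus A versus corpus B.

    Positive scores: the feature is relatively more common in A.
    Negative scores: it is relatively more common in B.
    """
    count_a = Counter(f for doc in corpus_a for f in features(doc))
    count_b = Counter(f for doc in corpus_b for f in features(doc))
    vocab = set(count_a) | set(count_b)
    # Denominators include the smoothing mass for every feature in the vocabulary.
    total_a = sum(count_a.values()) + alpha * len(vocab)
    total_b = sum(count_b.values()) + alpha * len(vocab)
    return {
        f: math.log((count_a[f] + alpha) / total_a)
        - math.log((count_b[f] + alpha) / total_b)
        for f in vocab
    }


# Toy, hand-written examples (not real data).
propaganda = ["the kafir dog will feel our rage", "rage against every kafir"]
mainstream = ["my dog loves the park", "road rage on the way to work"]

scores = log_odds(propaganda, mainstream)
for feat in sorted(scores, key=scores.get, reverse=True)[:5]:
    print(f"{scores[feat]:+.2f}  {feat}")
```

In this toy setup the pair `dog+kafir` outscores `dog` alone, because *dog* also appears in mainstream text while the combination does not. A production system would work from far larger corpora and feed such features into a trained classifier rather than rely on raw scores.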

The technology adapts itself to newly available data as the rhetoric evolves.
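One way to adapt to evolving rhetoric is online learning: the model is updated each time a newly labeled message arrives, instead of being retrained from scratch. The source does not say which learning algorithm Textgain uses; the sketch below assumes a simple perceptron over word-presence features, and the `OnlinePerceptron` class, its methods, and the toy messages are hypothetical.

```python
import re


class OnlinePerceptron:
    """Hypothetical binary text classifier that learns one labeled message at a time.

    Labels are +1 (flag) and -1 (ignore); features are lowercase word presence.
    """

    def __init__(self):
        self.weights = {}
        self.bias = 0.0

    @staticmethod
    def _features(text):
        return set(re.findall(r"\w+", text.lower()))

    def score(self, text):
        return self.bias + sum(self.weights.get(w, 0.0) for w in self._features(text))

    def predict(self, text):
        return 1 if self.score(text) > 0 else -1

    def update(self, text, label):
        """Perceptron rule: change the weights only when the current prediction is wrong."""
        if self.predict(text) != label:
            for w in self._features(text):
                self.weights[w] = self.weights.get(w, 0.0) + label
            self.bias += label


model = OnlinePerceptron()
# Initial toy training stream (hand-written, not real data).
model.update("the kafir dog will feel our rage", +1)
model.update("my dog loves the park", -1)

# A hypothetical new phrasing the model has never seen is not flagged yet...
print(model.predict("a brand new slogan"))  # → -1
# ...until a newly labeled example arrives and the model updates in place.
model.update("a brand new slogan", +1)
print(model.predict("a brand new slogan"))  # → 1
```

The perceptron is used here only because it is the shortest correct online learner; averaged perceptrons or logistic regression trained with stochastic gradient descent are common, more robust alternatives.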

We will share our technology with platforms that are struggling with this problem.

Press coverage [text analytics]

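- ‘UA ontwikkelt detectiesoftware haatboodschappen’ (UA develops detection software for hate messages), Het Journaal, VRT

- ‘Textgain ontwikkelt haatberichtendetector’ (Textgain develops a hate message detector), De Standaard

- ‘Antwerpse taaltechnologie legt haatberichten bloot’ (Antwerp language technology exposes hate messages), Laatste Nieuws

- ‘Deze software spoort online haatberichten op’ (This software tracks down online hate messages), De Morgen

- ‘Antwerpse taaltechnologie legt haatberichten bloot’ (Antwerp language technology exposes hate messages), De Morgen

- ‘Nieuwe software scant haatberichten’ (New software scans hate messages), VTM Nieuws

- ‘Vlaamse technologie traceert haatberichten’ (Flemish technology traces hate messages), Het Nieuwsblad

- ‘Nieuwe technologie filtert snel haatberichten’ (New technology quickly filters hate messages), Radio 2 (at 0:57:30)

- ‘Nieuwe technologie ontcijfert IS-propaganda’ (New technology deciphers IS propaganda), Gazet Van Antwerpen

- ‘Antwerp researchers identify anonymous internet culprits’, Flanders Today

- ‘Des chercheurs anversois traquent la propagande djihadiste’ (Antwerp researchers track down jihadist propaganda), RTBF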

Press coverage [image analysis]

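- ‘Antwerps bedrijfje kan verborgen IS-boodschappen opsporen’ (Small Antwerp company can detect hidden IS messages), Gazet Van Antwerpen

- ‘Un algorithme belge pour traquer les djihadistes’ (A Belgian algorithm to track down jihadists), L’Echo

- ‘Antwerpse technologie spoort IS-propaganda op’ (Antwerp technology detects IS propaganda), ATV

- ‘Antwerp-developed software to counter IS’, Flandersnews.be

- ‘Un logiciel anversois reconnaît la propagande de l’Etat islamique en ligne’ (Antwerp software recognizes Islamic State propaganda online), RTBF

- ‘Met deze software wil Antwerps bedrijf IS-propaganda opsporen’ (With this software, an Antwerp company aims to detect IS propaganda), De Morgen

- ‘Nieuwe technologie uit Antwerpen herkent foto’s van IS-aanhangers’ (New technology from Antwerp recognizes photos of IS supporters), Deredactie.be, VRT

- ‘Phần mềm nhận biết việc tuyên truyền của IS trên mạng’ (Software recognizes IS propaganda online), Đầu Báo

- ‘Antwerp start-up’s software detects IS propaganda’, Flanders Today

- ‘Nieuwe technologie uit Antwerpen herkent online IS-propaganda’ (New technology from Antwerp recognizes IS propaganda online), Het Nieuwsblad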