Using AI to track online propaganda

Our constant interaction with social media gives propaganda an opportunity to thrive online. At Textgain, we investigate how online platforms are used to spread disinformation and influence public opinion. Our findings shed some light on what propaganda looks like in 2025 and the vulnerabilities it can exploit.

Detecting propaganda narratives

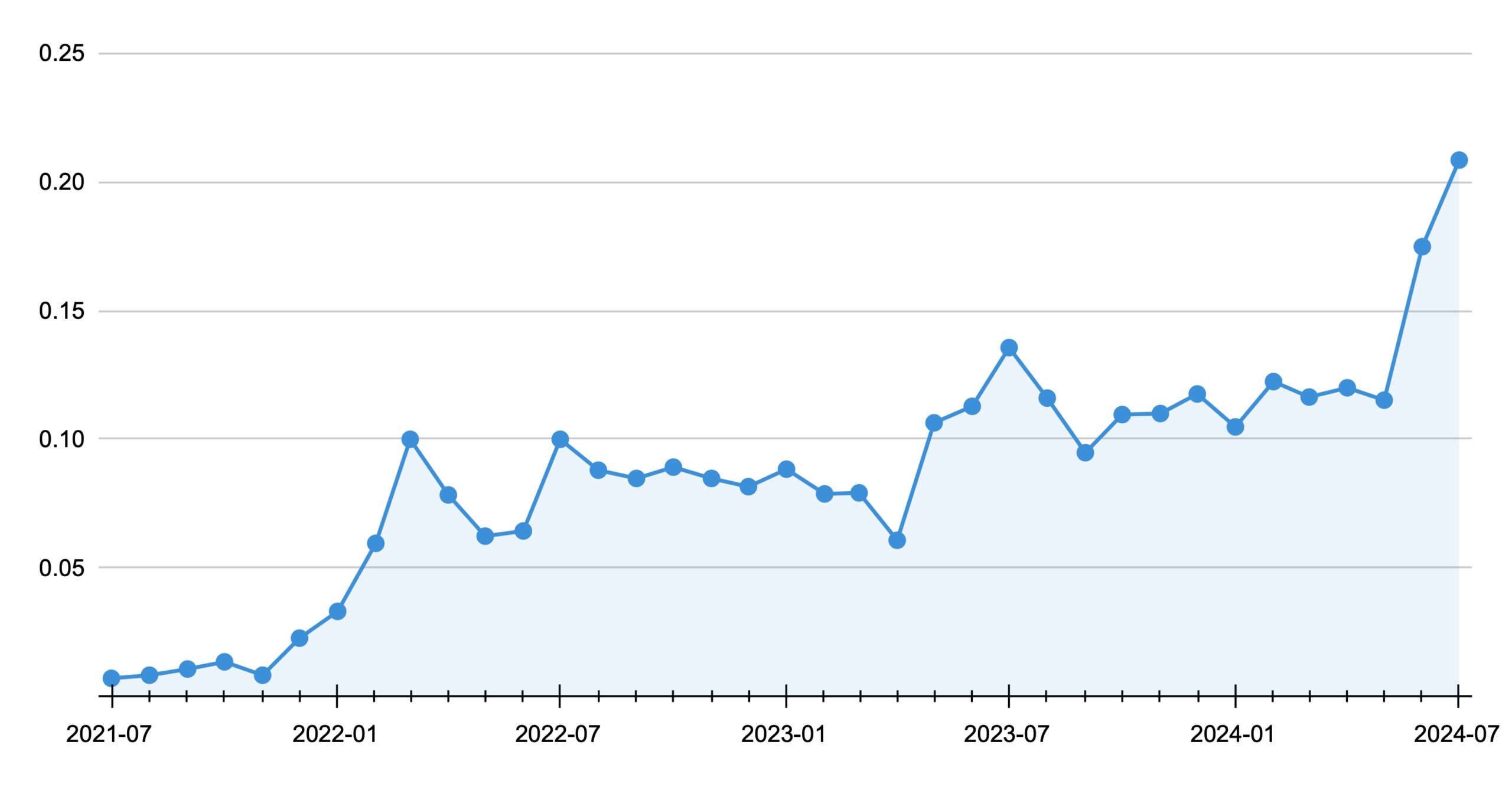

As a starting point, we trained AI to recognize anti-NATO propaganda online. This is a work in progress, but we are already seeing some trends emerge. By examining thousands of social media posts each month between July 2021 and July 2024, we have observed several distinct peaks in propaganda activity. Notably, these spikes have coincided with real-world geopolitical events, such as elections or high-stakes policy decisions.
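The post does not say how the peaks were identified, so the following is only a minimal sketch of one way such a trend could be computed. It assumes every post has already been labeled by a classifier and reduced to a `(month, flagged)` pair. The function names, the sample data, and the "mean plus one standard deviation" threshold are illustrative assumptions, not Textgain's actual pipeline.

```python
from collections import defaultdict
from statistics import mean, pstdev


def monthly_share(posts):
    """Return {month: percent of posts flagged as propaganda}.

    `posts` is an iterable of (month, flagged) pairs, where month is a
    'YYYY-MM' string and flagged is a bool produced by some classifier
    (hypothetical input format).
    """
    totals = defaultdict(int)
    flagged_counts = defaultdict(int)
    for month, flagged in posts:
        totals[month] += 1
        if flagged:
            flagged_counts[month] += 1
    # Sort by month so the result reads chronologically.
    return {m: 100.0 * flagged_counts[m] / totals[m] for m in sorted(totals)}


def find_peaks(shares, z=1.0):
    """Return months whose share exceeds mean + z * standard deviation.

    The threshold rule is an assumption chosen for illustration.
    """
    values = list(shares.values())
    threshold = mean(values) + z * pstdev(values)
    return [month for month, value in shares.items() if value > threshold]


# Made-up sample: 10 posts per month, with one unusually active month.
sample_posts = (
    [("2022-01", i < 1) for i in range(10)]
    + [("2022-02", i < 5) for i in range(10)]
    + [("2022-03", i < 1) for i in range(10)]
    + [("2022-04", i < 1) for i in range(10)]
)

shares = monthly_share(sample_posts)
peaks = find_peaks(shares)
```

In this made-up sample, February stands out against the other months. Peaks found this way would still have to be compared with a timeline of real-world events by hand.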

Also interesting is where these propaganda narratives come from. While many originate from foreign sources, others are driven from within the EU. The result is often a flood of coordinated disinformation that seeks to manipulate public perception on a large scale.

Fig 1: Estimated percentage of online propaganda between July 2021 and July 2024

When cognitive warfare meets human psychology

Cyber warfare is evolving rapidly, and online propaganda exploits our natural tendency to trust what we read. It also plays on emotions like fear and anger to influence people’s beliefs. Today, anyone anywhere can try to influence your thoughts, and this kind of threat cannot be addressed without proper detection.

That’s why we’re focused on building AI models capable of identifying disinformation campaigns. These tools allow us to track the spread of harmful narratives and flag emerging threats in real time. Online manipulation is a powerful tool with the potential to affect everything from politics to global security. By investing our time and expertise in detecting adversarial propaganda, we aim to help limit its reach and mitigate its consequences.